Origins of Mind: 05

How do humans first come to know simple facts about particular physical objects?

Three requirements

- segment objects

- represent objects as persisting (‘permanence’)

- track objects’ interactions

Principles of Object Perception

- cohesion—‘two surface points lie on the same object only if the points are linked by a path of connected surface points’

- boundedness—‘two surface points lie on distinct objects only if no path of connected surface points links them’

- rigidity—‘objects are interpreted as moving rigidly if such an interpretation exists’

- no action at a distance—‘separated objects are interpreted as moving independently of one another if such an interpretation exists’

Spelke, 1990
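
Read together, cohesion and boundedness imply that two surface points belong to the same object exactly when a path of connected surface points links them. The following is a minimal sketch of segmentation on that reading, not a model taken from Spelke (1990). The names `segment` and `adjacent`, the grid coordinates, and the choice of grid adjacency as the 'connected' relation are all assumptions made for illustration.

```python
from collections import deque


def segment(surface_points, connected):
    """Label surface points so that two points share a label
    exactly when a path of connected points links them."""
    labels = {}
    next_label = 0
    for start in surface_points:
        if start in labels:
            continue
        # Breadth-first search collects every point reachable from `start`.
        labels[start] = next_label
        queue = deque([start])
        while queue:
            p = queue.popleft()
            for q in surface_points:
                if q not in labels and connected(p, q):
                    labels[q] = next_label
                    queue.append(q)
        next_label += 1
    return labels


def adjacent(p, q):
    # Hypothetical 'connected' relation: neighbouring cells on a grid.
    return abs(p[0] - q[0]) + abs(p[1] - q[1]) == 1


# Two clusters of surface points separated by a gap.
points = [(0, 0), (0, 1), (1, 1), (3, 3), (3, 4)]
labels = segment(points, adjacent)
```

On this toy input the first three points receive one label and the last two another, because no chain of adjacent points bridges the gap between the clusters.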

three requirements, one set of principles

Three Questions

1. How do four-month-old infants model physical objects?

2. What is the relation between the model and the infants?

3. What is the relation between the model and the things modelled (physical objects)?

the Simple View

Uncomplicated Account of Minds and Actions

For any given proposition [There’s a spider behind the book] and any given human [Wy] ...

1. Either Wy knows that there’s a spider behind the book, or she does not.

2. Either Wy can act for the reason that there is, or seems to be, a spider behind the book (where this is her reason for acting), or else she cannot.

3. The first alternatives of (1) and (2) are either both true or both false.
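
The logical shape of these three claims can be made explicit as follows. This is only a sketch: the letters K and A are labels introduced here, not notation from the lecture.

```latex
% K: Wy knows that there's a spider behind the book.
% A: Wy can act for the reason that there is, or seems to be,
%    a spider behind the book.
\begin{align*}
  &(1)\quad K \lor \lnot K \\
  &(2)\quad A \lor \lnot A \\
  &(3)\quad K \leftrightarrow A
\end{align*}
```

Claims (1) and (2) are instances of excluded middle, so (3) carries the substantive commitment: any case in which A holds without K, or K without A, is inconsistent with it.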

A Problem

scientific intuitive arguments against the simple view

conflicting evidence: permanence

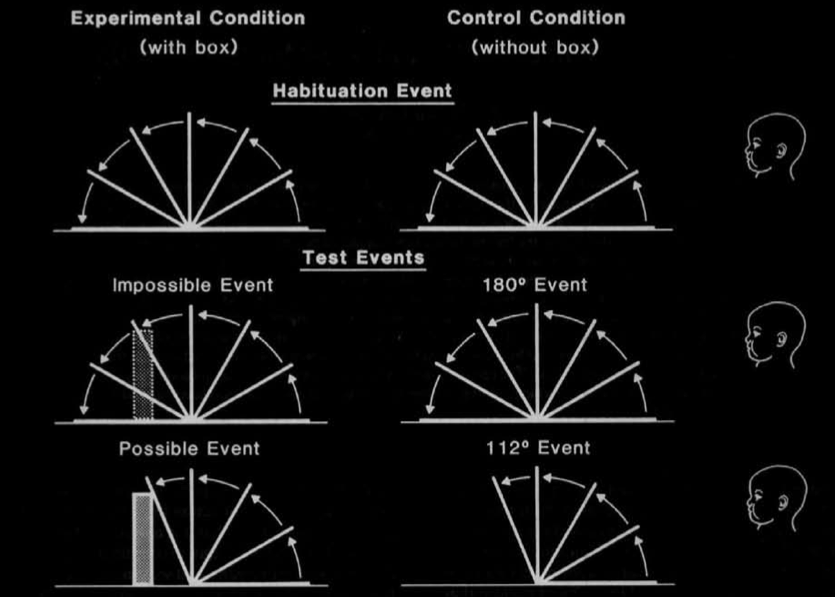

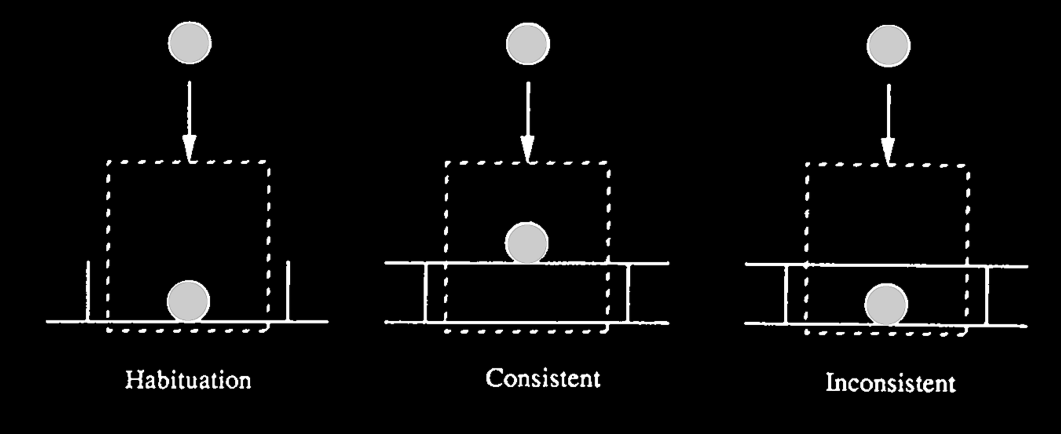

Baillargeon et al 1987, figure 1

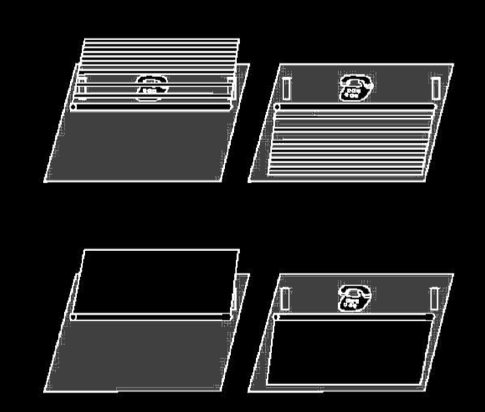

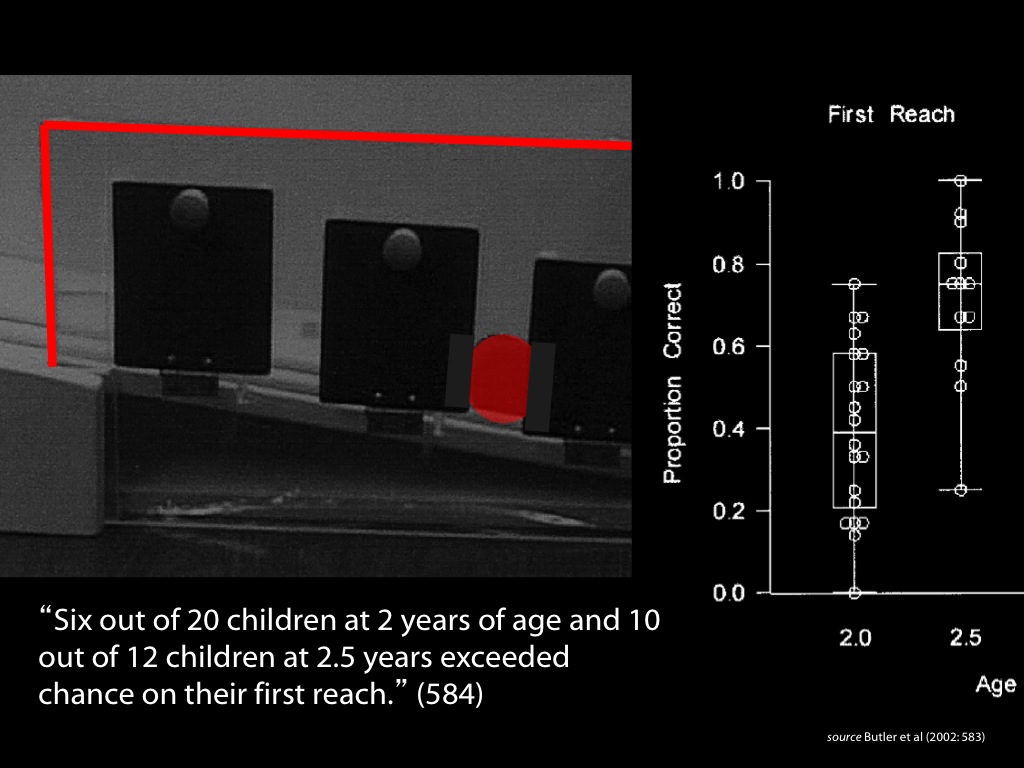

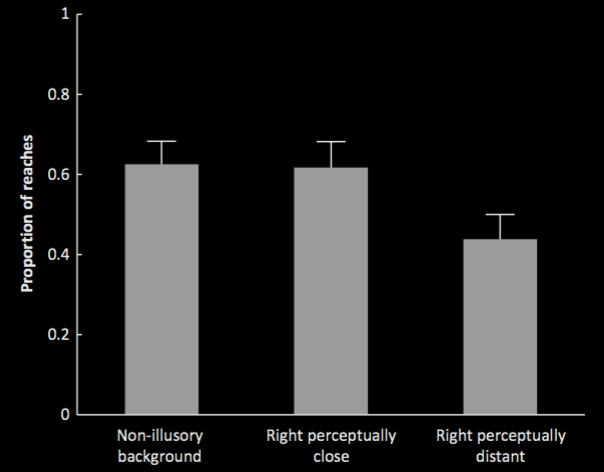

Shinskey and Munakata 2001, figure 1

Shinskey and Munakata 2001, figure 2

Responses to the occlusion of a desirable object

- look (from 2.5 months)

(Aguiar & Baillargeon 1999)

- reach (7–9 months)

(Shinskey & Munakata 2001)

‘action demands are not the only cause of failures on occlusion tasks’

Shinskey (2012, p. 291)

conflicting evidence: causal interactions

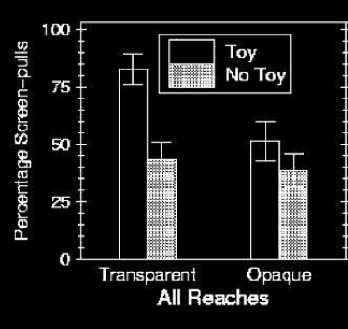

Spelke et al 1992, figure 2

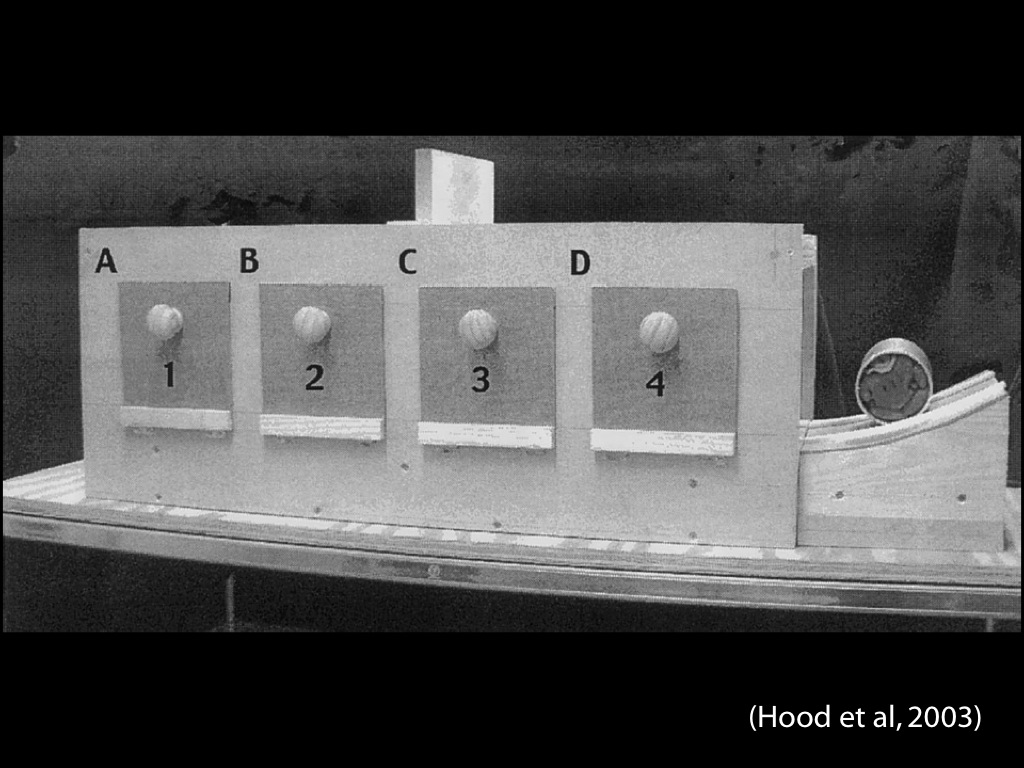

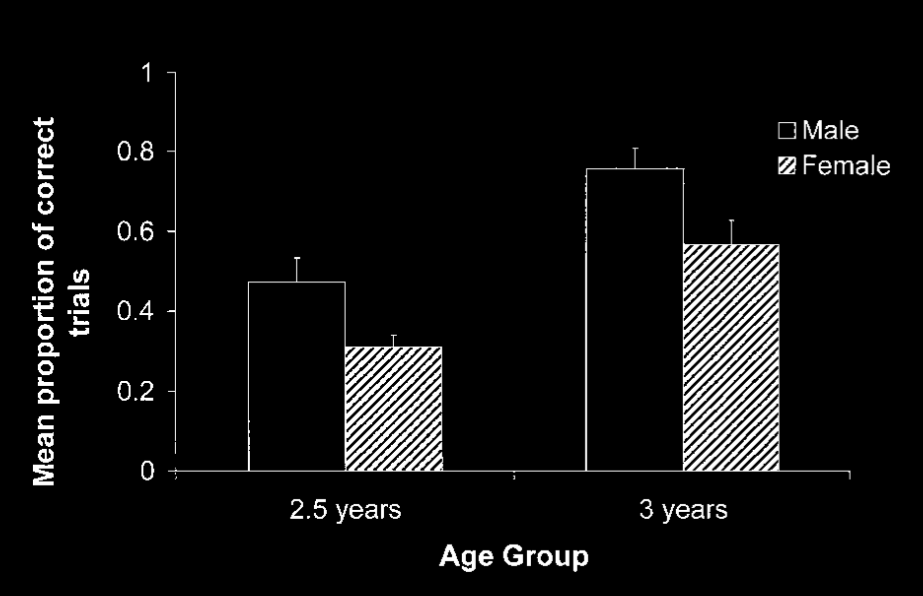

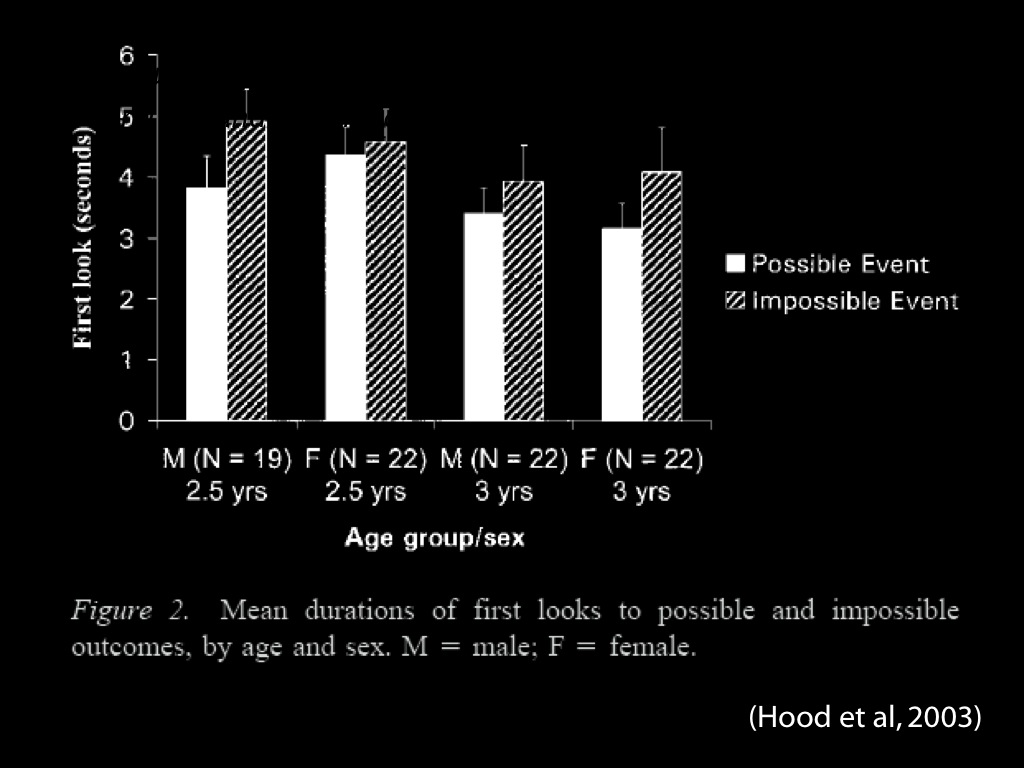

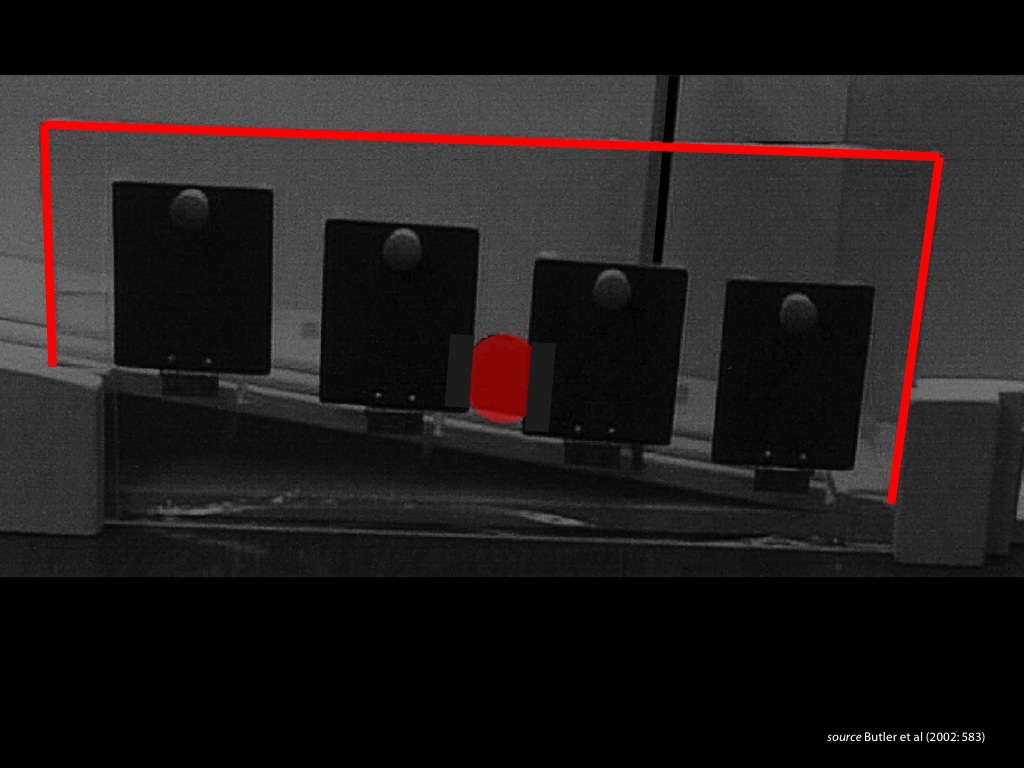

search

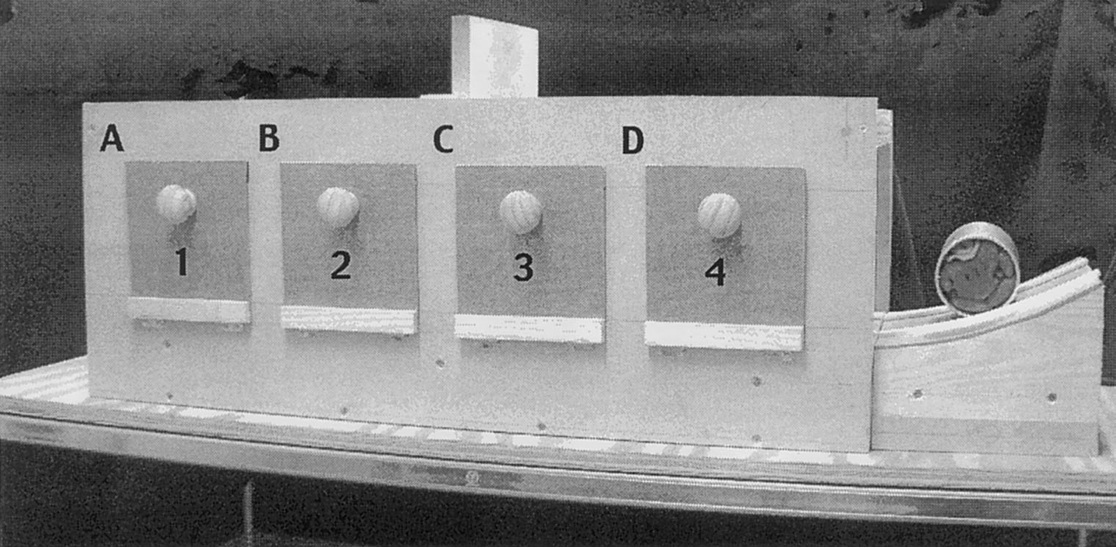

Hood et al 2003, figure 4

The Simple View generates multiple incorrect predictions.

‘A similar permanent dissociation in understanding object support relations might exist in chimpanzees. They identify impossible support relations in looking tasks, but fail to do so in active problem solving.’

(Gomez 2005)

Likewise for cotton-top tamarins (Santos et al 2006) and marmosets (Cacchione et al 2012).

‘to date, adult primates’ failures on search tasks appear to exactly mirror the cases in which human toddlers perform poorly.’

(Santos & Hood 2009, p. 17)

What about the chicks and dogs?

The Simple View generates multiple incorrect predictions.

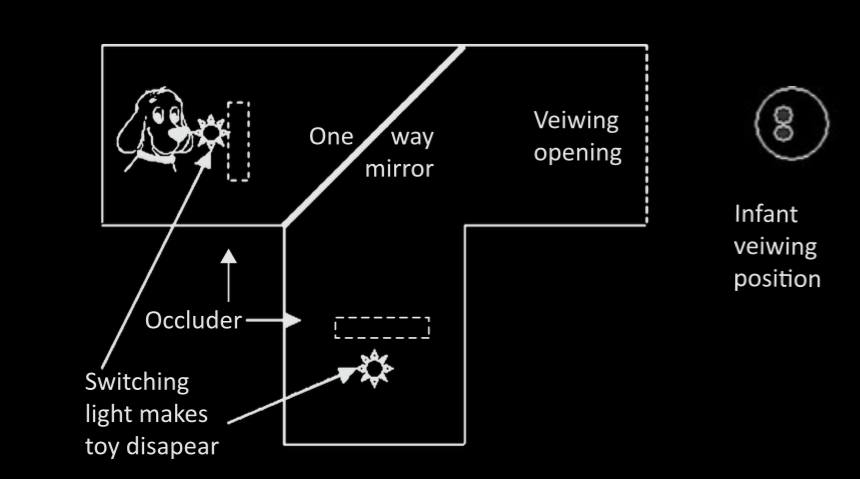

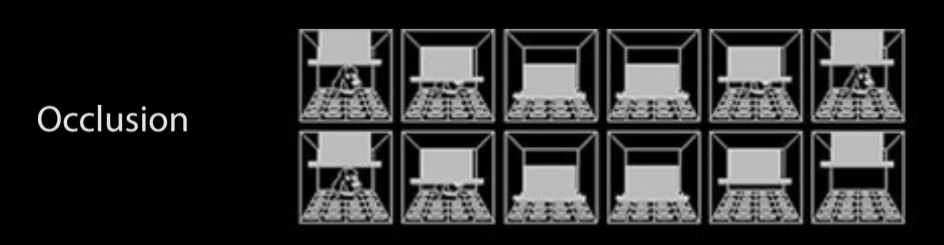

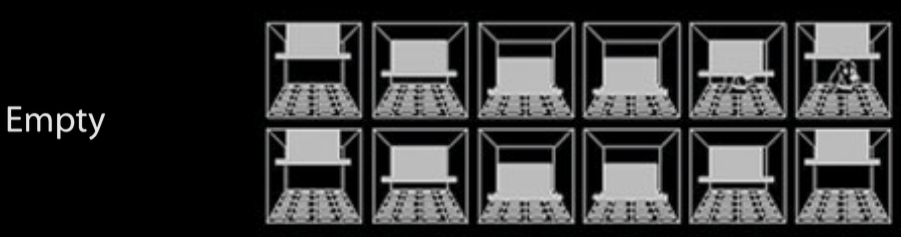

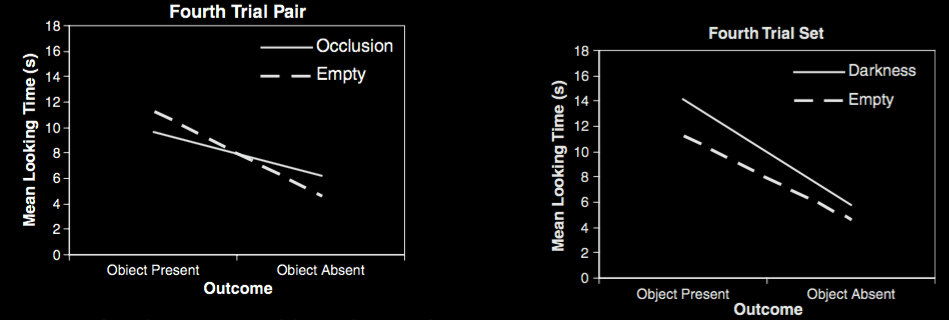

| | occlusion | endarkening |
| --- | --- | --- |
| violation-of-expectations | ✔ | ✘ |
| manual search | ✘ | ✔ |

Charles & Rivera (2009)

Charles & Rivera, figure 1 (part)

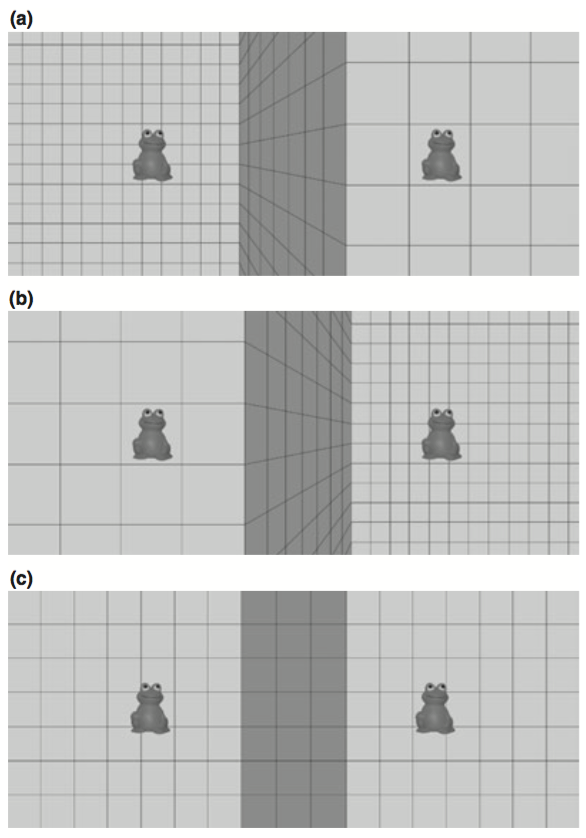

Charles & Rivera, 2009 fig 3a-c

Charles & Rivera, 2009 figs 5-6

| | occlusion | endarkening |
| --- | --- | --- |
| violation-of-expectations | ✔ | ✘ |
| manual search | ✘ | ✔ |

Charles & Rivera (2009)

The Simple View generates multiple incorrect predictions.

Like Knowledge and Like Not Knowledge (SHORTENED)

a problem

Three Questions

1. How do four-month-old infants model physical objects?

2. What is the relation between the model and the infants?

3. What is the relation between the model and the things modelled (physical objects)?

Four-month-olds can act (e.g. look and reach) for the reason that this object is there.

Four-month-olds cannot believe, nor know, that this object is there.

Uncomplicated Account of Minds and Actions

For any given proposition [There’s a spider behind the book] and any given human [Wy] ...

1. Either Wy knows that there’s a spider behind the book, or she does not.

2. Either Wy can act for the reason that there is, or seems to be, a spider behind the book (where this is her reason for acting), or else she cannot.

3. The first alternatives of (1) and (2) are either both true or both false.

generality of the problem

| domain | evidence for knowledge in infancy | evidence against knowledge |
| --- | --- | --- |
| colour | categories used in learning labels & functions | failure to use colour as a dimension in ‘same as’ judgements |
| physical objects | patterns of dishabituation and anticipatory looking | unreflected in planned action (may influence online control) |
| number | --""-- | --""-- |
| syntax | anticipatory looking | [as adults] |
| minds | reflected in anticipatory looking, communication, &c | not reflected in judgements about action, desire, ... |

‘if you want to describe what is going on in the head of the child when it has a few words which it utters in appropriate situations, you will fail for lack of the right sort of words of your own.

‘We have many vocabularies for describing nature when we regard it as mindless, and we have a mentalistic vocabulary for describing thought and intentional action; what we lack is a way of describing what is in between’

(Davidson 1999, p. 11)

The Problem with the Simple View: Summary

How do humans first come to know simple facts about particular physical objects?

Three requirements

- segment objects

- represent objects as persisting (‘permanence’)

- track objects’ interactions

Principles of Object Perception

- cohesion—‘two surface points lie on the same object only if the points are linked by a path of connected surface points’

- boundedness—‘two surface points lie on distinct objects only if no path of connected surface points links them’

- rigidity—‘objects are interpreted as moving rigidly if such an interpretation exists’

- no action at a distance—‘separated objects are interpreted as moving independently of one another if such an interpretation exists’

Spelke, 1990

Three Questions

1. How do four-month-old infants model physical objects?

2. What is the relation between the model and the infants?

3. What is the relation between the model and the things modelled (physical objects)?

the Simple View

| | occlusion | endarkening |
| --- | --- | --- |
| violation-of-expectations | ✔ | ✘ |
| manual search | ✘ | ✔ |

Charles & Rivera (2009)

The Simple View generates multiple incorrect predictions.

What Is Core Knowledge?

What is core knowledge? What are core systems?

‘Just as humans are endowed with multiple, specialized perceptual systems, so we are endowed with multiple systems for representing and reasoning about entities of different kinds.’

Carey and Spelke, 1996 p. 517

‘core systems are

- largely innate

- encapsulated

- unchanging

- arising from phylogenetically old systems

- built upon the output of innate perceptual analyzers’

(Carey and Spelke 1996: 520)

representational format: iconic (Carey 2009)

Why postulate core knowledge?

The Simple View

The Core Knowledge View

| domain | evidence for knowledge in infancy | evidence against knowledge |
| --- | --- | --- |
| colour | categories used in learning labels & functions | failure to use colour as a dimension in ‘same as’ judgements |
| physical objects | patterns of dishabituation and anticipatory looking | unreflected in planned action (may influence online control) |
| number | --""-- | --""-- |
| syntax | anticipatory looking | [as adults] |
| minds | reflected in anticipatory looking, communication, &c | not reflected in judgements about action, desire, ... |

Why postulate core knowledge?

The Simple View

The Core Knowledge View

Objections to Core Knowledge

‘Just as humans are endowed with multiple, specialized perceptual systems, so we are endowed with multiple systems for representing and reasoning about entities of different kinds.’

Carey and Spelke, 1996 p. 517

‘core systems are

- largely innate

- encapsulated

- unchanging

- arising from phylogenetically old systems

- built upon the output of innate perceptual analyzers’

(Carey and Spelke 1996: 520)

representational format: iconic (Carey 2009)

multiple definitions

‘there is a paucity of … data to suggest that they are the only or the best way of carving up the processing,

‘and it seems doubtful that the often long lists of correlated attributes should come as a package’

Adolphs (2010 p. 759)

‘we wonder whether the dichotomous characteristics used to define the two-system models are … perfectly correlated …

[and] whether a hybrid system that combines characteristics from both systems could not be … viable’

Keren and Schul (2009, p. 537)

‘the process architecture of social cognition is still very much in need of a detailed theory’

Adolphs (2010 p. 759)

Is definition by listing features (a) justified, and is it (b) compatible with the claim that core knowledge is explanatory?

Why do we need a notion like core knowledge?

| domain | evidence for knowledge in infancy | evidence against knowledge |
| --- | --- | --- |
| colour | categories used in learning labels & functions | failure to use colour as a dimension in ‘same as’ judgements |
| physical objects | patterns of dishabituation and anticipatory looking | unreflected in planned action (may influence online control) |
| number | --""-- | --""-- |
| syntax | anticipatory looking | [as adults] |
| minds | reflected in anticipatory looking, communication, &c | not reflected in judgements about action, desire, ... |

| | occlusion | endarkening |
| --- | --- | --- |
| violation-of-expectations | ✔ | ✘ |
| manual search | ✘ | ✔ |

Charles & Rivera (2009)

If this is what core knowledge is for, what features must core knowledge have?

‘Just as humans are endowed with multiple, specialized perceptual systems, so we are endowed with multiple systems for representing and reasoning about entities of different kinds.’

Carey and Spelke, 1996 p. 517

‘core systems are

- largely innate

- encapsulated

- unchanging

- arising from phylogenetically old systems

- built upon the output of innate perceptual analyzers’

(Carey and Spelke 1996: 520)

representational format: iconic (Carey 2009)

If this is what core knowledge is for, what features must core knowledge have?

not being knowledge

objections to the Core Knowledge View:

- multiple definitions

- justification for definition by list-of-features

- definition by list-of-features rules out explanation

- mismatch of definition to application

The Core Knowledge View generates no relevant predictions.

Core System vs Module

core system = module?

‘In Fodor’s (1983) terms, visual tracking and preferential looking each may depend on modular mechanisms.’

Spelke et al 1995, p. 137

Modules

- they are ‘the psychological systems whose operations present the world to thought’;

- they ‘constitute a natural kind’; and

- there is ‘a cluster of properties that they have in common … [they are] domain-specific computational systems characterized by informational encapsulation, high-speed, restricted access, neural specificity, and the rest’ (Fodor 1983: 101)

- domain specificity

modules deal with ‘eccentric’ bodies of knowledge

- limited accessibility

representations in modules are not usually inferentially integrated with knowledge

- information encapsulation

roughly, modules are unaffected by general knowledge or representations in other modules

For something to be informationally encapsulated is, roughly, for its operation to be unaffected by the mere existence of general knowledge or representations stored in other modules (Fodor 1998b: 127)

- innateness

roughly, the information and operations of a module are not straightforwardly consequences of learning

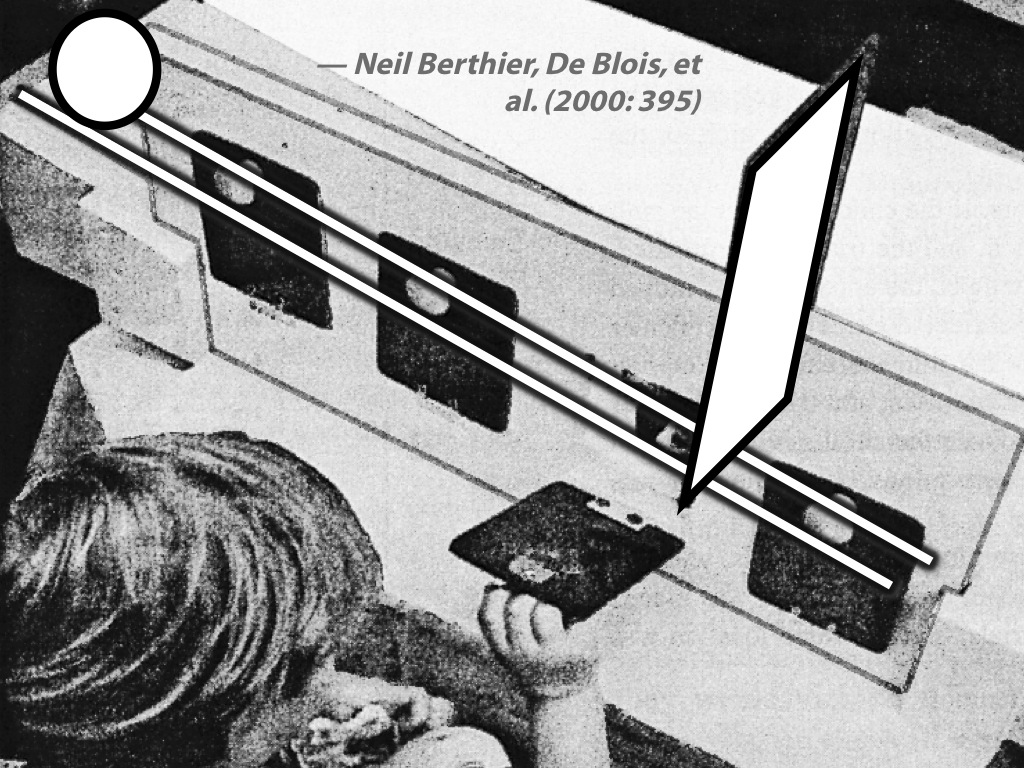

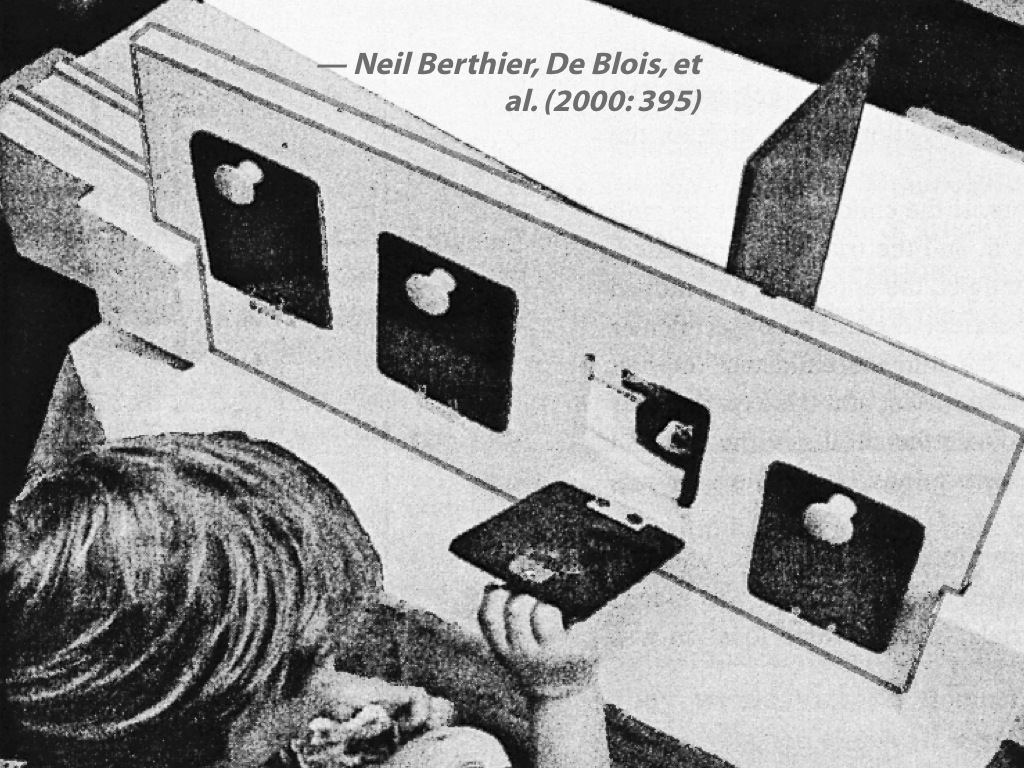

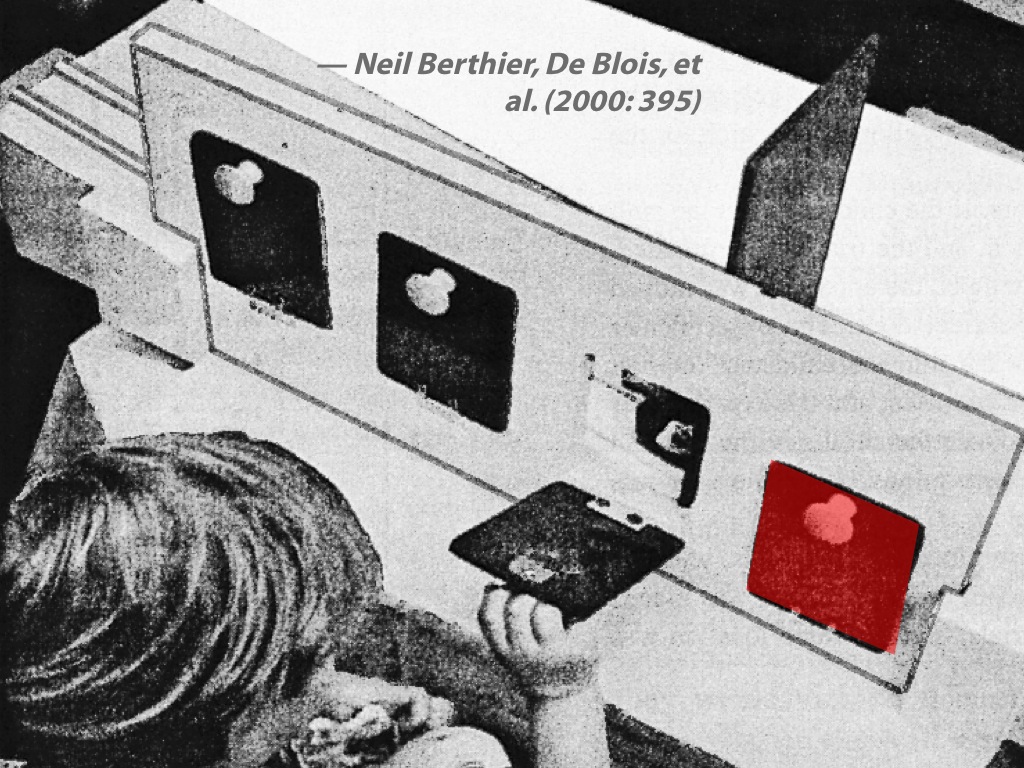

maximum grip aperture

(source: Jeannerod 2009, figure 10.1)

Glover (2002, figure 1a)

van Wermeskerken et al 2013, figure 1

van Wermeskerken et al 2013, figure 2

- domain specificity

modules deal with ‘eccentric’ bodies of knowledge

- limited accessibility

representations in modules are not usually inferentially integrated with knowledge

- information encapsulation

roughly, modules are unaffected by general knowledge or representations in other modules

For something to be informationally encapsulated is, roughly, for its operation to be unaffected by the mere existence of general knowledge or representations stored in other modules (Fodor 1998b: 127)

- innateness

roughly, the information and operations of a module are not straightforwardly consequences of learning

‘core systems are

- largely innate,

- encapsulated, and

- unchanging,

- arising from phylogenetically old systems

- built upon the output of innate perceptual analyzers’

(Carey and Spelke 1996: 520)

Modules are ‘the psychological systems whose operations present the world to thought’; they ‘constitute a natural kind’; and there is ‘a cluster of properties that they have in common’

- innateness

- information encapsulation

- domain specificity

- limited accessibility

- ...

core system = module ?

Will the notion of modularity help us in meeting the objections to the Core Knowledge View?

- multiple definitions

- justification for definition by list-of-features

- definition by list-of-features rules out explanation

- mismatch of definition to application

Modules

- they are ‘the psychological systems whose operations present the world to thought’;

- they ‘constitute a natural kind’; and

- there is ‘a cluster of properties that they have in common … [they are] domain-specific computational systems characterized by informational encapsulation, high-speed, restricted access, neural specificity, and the rest’ (Fodor 1983: 101)

Spelke et al 1992, figure 2

Hood et al 2003, figure 1

| | occlusion | endarkening |
| --- | --- | --- |
| violation-of-expectations | ✔ | ✘ |
| manual search | ✘ | ✔ |

Charles & Rivera (2009)

- domain specificity

modules deal with ‘eccentric’ bodies of knowledge

- limited accessibility

representations in modules are not usually inferentially integrated with knowledge

- information encapsulation

roughly, modules are unaffected by general knowledge or representations in other modules

For something to be informationally encapsulated is, roughly, for its operation to be unaffected by the mere existence of general knowledge or representations stored in other modules (Fodor 1998b: 127)

- innateness

roughly, the information and operations of a module are not straightforwardly consequences of learning

core system = module

Some, not all, objections to the Core Knowledge View overcome:

- multiple definitions

- justification for definition by list-of-features

- definition by list-of-features rules out explanation

- mismatch of definition to application

The Core Knowledge View generates no relevant predictions.